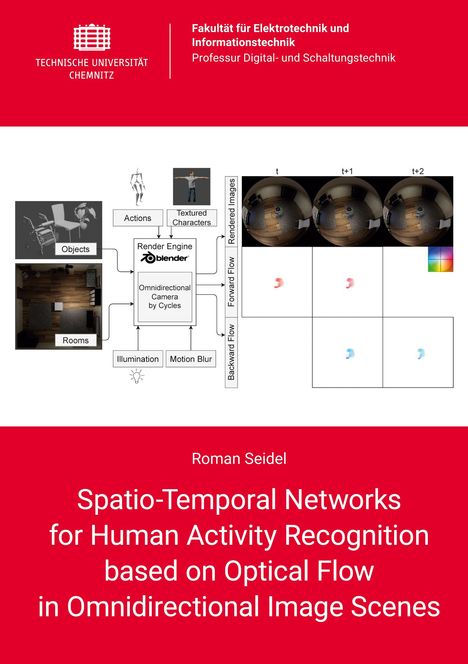

Roman Seidel: Spatio-Temporal Networks for Human Activity Recognition based on Optical Flow in Omnidirectional Image Scenes


Book

Available within 2-3 weeks

(subject to availability from the supplier)

EUR 21.90*

Extended return period until 31 January 2025

All products eligible for return that were purchased between 1 and 31 December 2024 can be returned until 31 January 2025.

- Technische Universität Chemnitz, 03/2024

- Binding: Softcover / paperback

- Language: English

- ISBN-13: 9783961002054

- Order number: 11786340

- Extent: 212 pages

- Weight: 314 g

- Dimensions: 210 x 148 mm

- Thickness: 14 mm

- Publication date: 1 March 2024

Note: This item is not in German!

Blurb

This dissertation draws on the properties of human motion perception to infer human activity from data using artificial neural networks. One of its main aims is to determine which modalities, namely RGB images, optical flow and human keypoints, are best suited for human activity recognition (HAR) in omnidirectional data. Since these modalities are not yet available as data for omnidirectional cameras, they are generated synthetically with a 3D indoor simulation, resulting in a large-scale dataset called OmniFlow. Due to the lack of omnidirectional optical flow data, the OmniFlow dataset is validated using Test-Time Augmentation (TTA). Compared with the baseline, Recurrent All-Pairs Field Transforms (RAFT) trained on the FlyingChairs and FlyingThings3D datasets, fine-tuning on only about 1000 images was found to be sufficient to obtain a very low end-point error (EPE).

For the evaluation at the activity level, two state-of-the-art convolutional neural networks (CNNs) were used: the Temporal Segment Network (TSN) for the RGB image and optical flow modalities, and PoseC3D for the human keypoint modality. Both CNNs were trained and validated on OmniFlow and on the real-world dataset OmniLab. For each network, three hyperparameters were varied, and the top-1, top-5 and mean accuracies were reported. In addition, confusion matrices showing the class-wise accuracy for the 15 activity classes are given for the RGB image, optical flow and human keypoint modalities.

Notes:

Please note that we, too, are bound by fixed book prices and must pass short-term price increases or decreases on to you.
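The blurb above names the end-point error (EPE) for optical flow and the top-1, top-5 and mean accuracies plus class-wise confusion matrices for activity recognition. The following is a minimal sketch of these metrics, assuming their standard definitions: EPE as the Euclidean distance between predicted and ground-truth flow vectors averaged over all pixels, and "mean accuracy" read as the mean of per-class accuracies. The function names `average_epe`, `topk_accuracy`, `argmax_predictions`, `confusion_matrix` and `mean_class_accuracy` are hypothetical and not taken from the thesis, which may use an EPE variant adapted to omnidirectional (e.g. equirectangular) images.

```python
"""Sketch of the evaluation metrics named in the blurb (assumed standard definitions)."""
import math
from typing import List, Sequence, Tuple

# H x W grid of (u, v) displacement vectors, one per pixel.
Flow = Sequence[Sequence[Tuple[float, float]]]


def average_epe(pred: Flow, gt: Flow) -> float:
    """Mean Euclidean end-point error between predicted and ground-truth flow.

    Assumption: plain planar EPE; an omnidirectional variant might weight
    pixels or measure distances on the sphere instead.
    """
    total, count = 0.0, 0
    for row_p, row_g in zip(pred, gt):
        for (up, vp), (ug, vg) in zip(row_p, row_g):
            total += math.hypot(up - ug, vp - vg)  # length of the error vector
            count += 1
    return total / count


def topk_accuracy(scores: Sequence[Sequence[float]], labels: Sequence[int], k: int) -> float:
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    hits = 0
    for s, y in zip(scores, labels):
        topk = sorted(range(len(s)), key=lambda c: s[c], reverse=True)[:k]
        hits += y in topk
    return hits / len(labels)


def argmax_predictions(scores: Sequence[Sequence[float]]) -> List[int]:
    """Top-1 predicted class index for each sample."""
    return [max(range(len(s)), key=s.__getitem__) for s in scores]


def confusion_matrix(preds: Sequence[int], labels: Sequence[int], num_classes: int) -> List[List[int]]:
    """Counts with rows indexed by the true class and columns by the predicted class."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for p, y in zip(preds, labels):
        cm[y][p] += 1
    return cm


def mean_class_accuracy(cm: Sequence[Sequence[int]]) -> float:
    """Average of per-class accuracies (diagonal entry / row sum), skipping classes with no samples."""
    per_class = [row[i] / sum(row) for i, row in enumerate(cm) if sum(row) > 0]
    return sum(per_class) / len(per_class)
```

With 15 activity classes, top-5 accuracy counts a sample as correct when the true class is among the five highest-scoring classes. Mean class accuracy weights every class equally, so it is less dominated by frequent activities than top-1 accuracy; each row of the confusion matrix gives the class-wise accuracy that the per-class term averages.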